Sorry, But Humanity Can’t Be Coded

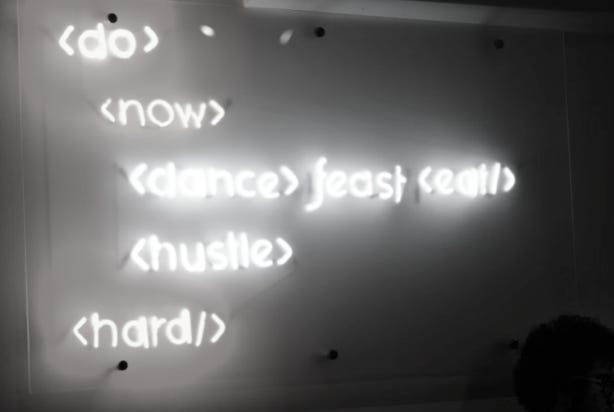

Why our messy, beautiful cultural habits resist being reduced to clean lines of computer code. Culture can’t be coded.

The dark room in Silicon Valley, lit only by the glow of screens, the flashing lights of the server racks and a few dimmed pot lights, thrummed quietly. Suddenly the lead architect’s voice squeaked, “We’ve got it!” Now they could predict which cultural trends would emerge at least six months ahead. The room erupted in applause, the energy vibrating through them all.

Thousands of miles away, in a small village in Oaxaca, a grandmother taught her granddaughter to weave patterns into a blanket. Patterns that had evolved over centuries, deeply rooted in cultural symbolism. Ones that no data point could capture. As the child’s fingers worked the threads, a new pattern emerged. One that would subtly transform the village’s artistic traditions and meanings. But that algorithm in Silicon Valley had a blind spot. It could never have detected this pattern.

There are those thought leaders and thinkers, often coders, who believe that human culture can be reduced to algorithms. As I’ve often said, that is culture on a diet. It is a fallacy of Silicon Valley to think it is possible. It is not. Here is my view.

It is not that we can’t codify some aspects of human behaviour or elements of culture; it’s just that not all of them can be. I am not anti-AI, far from it. We just need to cut through the coders’ hype.

The ultimate arbiter of technology is always culture. A new technology may arrive and change a culture, but in the end, the culture will force the technology to adapt to it in new ways. This has been so since we first started using stones as tools.

You might, rightly, be thinking, “Ah, but Artificial Intelligence is different because it’s a cognitive technology.” I don’t think so. I’ll explain why a little further below.

The word culture itself is incredibly complex and varied in its meanings. It includes aesthetics (art, literature, music), but also how we govern ourselves, our militaries and our sociocultural systems. It operates through complex, symbolic structures that resist simple binary coding. Algorithms operate only on the surface level of human culture; they do not penetrate it.

All cultures emerge from countless interactions, constantly morphing, shaped by us and by the complex natural world around us. Culture does not exist at the level of individual interactions. As anthropologist Clifford Geertz put it, we spin webs of significance, and it is in those webs that we embed our cultural symbols.

What idealist Silicon Valley coders think they can do is impose technical frameworks that strip away the richness of human experience. This is, essentially, Occam’s Razor taken too far, where simplification becomes oversimplification.

It views humanity through a machine’s lens, as a set of computational processes, rather than as what humans are: embodied, culturally situated beings.

So how does culture resist this attempt to encode it? These are, I think, the main facets of culture that are fundamentally irreducible.

It starts with tacit knowledge. As philosopher and polymath Michael Polanyi observed, “we know more than we can tell.” Our cultural knowledge is vast, and much of it is tacit. In a world where cultural transmission is happening faster than at any other time in human history, this tacit knowledge becomes even more complex.

Then there is the aesthetic side of culture, which is about experience. Art, architecture, literature, music, philosophy. These are subjective and qualitative, and such appreciation and understanding are beyond codification. Perhaps this is why so much AI-generated “art” has us cringing. It causes us to step back, much like the uncanny valley. Machines cannot experience the way humans do, so how can aesthetics be encoded in binary?

In all human cultures and societies, ethical reasoning is foundational to how they function. Ethical judgement involves contextual wisdom that algorithms cannot replicate.

Culture is a complex adaptive system with properties of self-organisation and adaptation. These emerge from the system as a whole. They cannot be programmed in.

Tactics can be codified, but strategy requires cultural judgement. And culture operates at the level of strategy, forming the background assumptions that then make tactics meaningful. Culture represents what in philosophy is called an “irreducible whole.”

Within culture are what we might term “nested hierarchies of meaning”, carried through symbols, norms and traditions. Take a wedding ring, for example. It carries meaning through its connection to traditions, economic exchange systems (reciprocity), religious systems, personal relationships, social signalling and much more. These layers cannot be coded.

Culture is always recreating itself. How often do we hear remarks like “that’s not the way my grandparents did things…”? When a society adopts a new technology, the technology doesn’t simply cause predictable outcomes; it reshapes the cultural system, which in turn influences how the technology is used and reshapes the technology itself.

When Alexander Graham Bell’s telephone arrived, many imagined it as a way to pipe opera and concerts into people’s homes. Culture adapted it into a way for people to talk to each other. This could neither have been predicted nor encoded.

Culture, too, has an embedded temporal dynamic. Cultural meaning accumulates through what anthropologist Marshall Sahlins called “the structure of the conjuncture”, where present actions and views derive their meaning from past events. This requires lived experience. That, too, cannot be coded.

Sociologist Pierre Bourdieu’s concept of “habitus” captures the idea that culture is also embodied history: we carry cultural knowledge in our bodies, not just our minds. Algorithms can’t “get” this embodied dimension of cultural memory.

Algorithmic approaches are inherently limited. They may identify patterns in cultures, but they cannot access the meaning of those patterns. Understanding requires interpreting meanings, not merely recognising patterns.

Then there’s contextual sensitivity. A raised eyebrow or a smile can mean different things in different cultures, and those meanings are constantly evolving. Algorithmic predictions will always fail to capture cultural innovations.

We might code for the tactical elements of culture and find patterns in behaviour and psychology, but even then, this is limited. It is one of the reasons I think we will not arrive at Artificial General Intelligence (AGI) anytime soon. We may even have the concept of superintelligence wrong as well.

Humans are complex social animals. Computer scientists are a very clever lot indeed. But so are anthropologists (okay, I’m biased here), sociologists, psychologists, musicians and writers. As long as coders ignore the humanities, however, how can they possibly assume they can code humanity when they don’t even understand it? Nor do they seem to want to. Bit of a contradiction, isn’t it?